NASA Technology

In 2008, a NASA effort to standardize its websites inspired a breakthrough in cloud computing technology. The innovation has spurred the growth of an entire industry in open source cloud services that has already attracted millions in investment and is currently generating hundreds of millions in revenue.

William Eshagh was part of the project in the early days, when it was known as NASA.net. “The feeling was that there was a proliferation of NASA websites and approaches to building them. Everything looked different, and it was all managed differently—it was a fragmented landscape.”

NASA.net aimed to resolve this problem by providing a standard set of tools and methods for web developers. The developers, in turn, would provide design, code, and functionality for their project while adopting and incorporating NASA’s standardized approach. Says Eshagh, “The basic idea was that the web developer would write their code and upload it to the website, and the website would take care of everything else.”

Even though the project was relatively narrow in its focus, the developers soon realized that they would need bigger, more foundational tools to accomplish the job. “We were trying to create a ‘platform layer,’ which is the concept of giving your code over to the service. But in order to build that, we actually needed to go a step deeper,” says Eshagh.

That next step was to create an “infrastructure service.” Whereas NASA.net was a platform for dealing with one type of application, an infrastructure service is a more general tool with a simpler purpose: to provide access to computing power. While such computing power could be used to run a service like NASA.net, it could also be used for other applications.

Put another way, what the team came to realize was that they needed to create a cloud computing service. Cloud computing is the delivery of software, processing power, and storage over the Internet. Whether these resources are as ordinary as a library of music files or as complex as a network of supercomputers, cloud computing enables an end user to control them remotely and simply. “The idea is to be able to log on to the service and say ‘I want 10 computers,’ and within a minute, I can start using those computers for any purpose whatsoever,” says Eshagh.

As the scope of the project expanded, NASA.net came to be known as Nebula. Much more than setting standards for Agency web developers, Nebula was intended to provide NASA developers, researchers, and scientists with a wide range of services for accessing and managing the large quantities of data the Agency accumulates every day. This was an enormous undertaking that only a high-powered cloud computing platform could support.

Raymond O’Brien, former program manager of Nebula, says the project was in some ways ahead of its time. “Back in 2008 and 2009, people were still trying to figure out what ‘cloud’ meant. While lots of people were calling themselves ‘cloud enabled’ or ‘cloud ready,’ there were few real commercial offerings. With so little clarity on the issue, there was an opportunity for us to help fill that vacuum.”

As the team built Nebula, one of the most pressing questions they faced was that of open source development, or the practice of building software in full view of the public over the Internet.

On the one hand, proprietary code might have helped the project overcome early hurdles, as commercial software can offer off-the-shelf solutions that speed up development by solving common problems. Proprietary software is sometimes so useful and convenient that the Nebula team wasn’t even sure that they could create the product without relying on closed source solutions at some point.

On the other hand, open source development would facilitate a collaborative environment without borders—literally anyone with the know-how and interest could access the code and improve on it. Because Nebula had evolved into a project that was addressing very general, widespread needs—not just NASA-wide, but potentially worldwide—the possibility of avoiding restrictive licensing agreements by going open source was very attractive.

O’Brien says that broad appeal was an important part of Nebula’s identity. “From the beginning, we wanted this project to involve a very large community—private enterprises, academic institutions, research labs—that would take Nebula and bring it to the next level. It was a dream, a vision. It was that way from the start.”

Despite uncertainties, the development team decided to make Nebula purely open source. Eshagh says the real test for that philosophy came when those constraints were stretched to their limits. “Eventually, we determined that existing open source tools did not fully address Nebula’s requirements,” he says. “But instead of turning to proprietary tools, we decided to write our own.”

The problem was with a component of the software called the cloud controller, or the tool that can turn a single server or pool of servers into many virtual servers, which can then be provisioned remotely using software. In effect, the controller gives an end user access in principle to as much or as little computing power and storage as is needed. Existing tools were either written in the wrong programming language or under the wrong software license.
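To make the idea concrete, here is a minimal sketch in Python of what a cloud controller does at its simplest: it tracks a pool of physical servers and hands out virtual servers on request. This is a toy illustration only, not Nebula's or OpenStack's actual code. The names `Host`, `ToyCloudController`, `provision`, and `release` are hypothetical, and counting CPU cores stands in for real virtualization.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Host:
    """A physical server with a fixed number of CPU cores to share out."""
    name: str
    total_cores: int
    used_cores: int = 0

    @property
    def free_cores(self) -> int:
        return self.total_cores - self.used_cores


@dataclass
class ToyCloudController:
    """Hypothetical controller that carves a pool of hosts into virtual servers."""
    hosts: list
    instances: dict = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1))

    def provision(self, n: int, cores_each: int = 1) -> list:
        """Create n virtual servers, or fail without creating any."""
        capacity = sum(h.free_cores // cores_each for h in self.hosts)
        if capacity < n:
            raise RuntimeError("not enough capacity in the pool")
        created = []
        for host in self.hosts:
            # Fill each host in turn until the request is satisfied.
            while host.free_cores >= cores_each and len(created) < n:
                host.used_cores += cores_each
                vm_id = f"vm-{next(self._ids)}"
                self.instances[vm_id] = (host, cores_each)
                created.append(vm_id)
        return created

    def release(self, vm_id: str) -> None:
        """Return a virtual server's cores to its host."""
        host, cores = self.instances.pop(vm_id)
        host.used_cores -= cores


# "I want 10 computers": two physical hosts serve ten one-core virtual servers.
controller = ToyCloudController([Host("rack-a", 8), Host("rack-b", 4)])
servers = controller.provision(10)
print(len(servers), [h.free_cores for h in controller.hosts])
```

A real controller also has to start the virtual machines, attach storage and networking, and accept requests over the Internet. The sketch covers only the bookkeeping that lets one pool of hardware serve many users.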

Within a matter of days, the Nebula team had built a new cloud controller from scratch, in Python (their preferred programming language for the controller), and under an open source license. When the team announced this breakthrough on its blog, they immediately began attracting attention from some of the biggest players in the industry. “We believed we were addressing a general problem that would have broad interest,” says Eshagh. “As it turns out, that prediction couldn’t have been more accurate.”

Technology Transfer

Rackspace Inc., of San Antonio, Texas, was one of the companies most interested in the technology. Rackspace runs the second largest public cloud in the world and was at the time offering computing and storage services using software they had created in-house. Jim Curry, general manager of Rackspace Cloud Builders, says they faced hurdles similar to those NASA faced in building a private cloud. “We tried to use available technology,” he says, “but it couldn’t scale up to meet our needs.”

The engineers at Rackspace wrote their own code for a number of years, but Curry says they didn’t see it as a sustainable activity. “We’re a hosting company—people come to us when they want to run standard server environments with a high level of hosting support that we can offer them. Writing proprietary code for unique technologies is not something we wanted to be doing long-term.”

The developers at Rackspace were fans of open source development and had been looking into open source solutions right at the time the Nebula team announced its new cloud controller. “Just weeks before we were going to announce our own open source project, we saw that what NASA had released looked very similar to what we were trying to do.” Curry reached out to the Nebula team, and within a week the two development teams met and agreed that it made sense to collaborate on the project going forward.

Each of the teams brought something to the table, says Curry. “The nice thing about it was that we were more advanced than NASA in some areas and vice versa, and we each complemented the other very well. For example, NASA was further along with their cloud controller, whereas we were further along on the storage side of things.”

The next step was for each organization to make its code open source so the two teams could launch the project as an independent, open entity. Jim Curry says the team at Rackspace was stunned by the speed at which NASA moved through the process. “Within a period of 30–45 days, NASA completed the process of getting the agreements to have this stuff done. From my perspective, they moved as fast as any company I’ve ever worked with, and it was really impressive to watch.”

The OpenStack project, the successor to Nebula with development from Rackspace, was announced in July 2010. As open source software, OpenStack has attracted a very broad community: nearly 2,500 independent developers and 150 companies are a part of it—including such giants as AT&T, HP, Cisco, Dell, and Intel. Semi-annual developers’ conferences, where members of the development community meet to exchange ideas and explore new directions for the software, now attract over 1,000 participants from about two dozen different countries.

Benefits

Because OpenStack is free, companies who use it to deploy servers do not need to pay licensing fees—fees that can easily total thousands of dollars per server per year. With the number of companies that have already adopted OpenStack, the software has potentially saved millions of dollars in server costs.

“Before OpenStack,” says Curry, “your only option was to pay someone money to solve the problem that OpenStack is addressing today. For people who want it as a solution, who like the idea of consuming open source, they now have an alternative to proprietary options.”

Not only is OpenStack saving money; it is also generating jobs and revenue at a remarkable pace. Curry says that dozens of Rackspace’s 80 cloud engineering jobs are directly attributable to OpenStack, and that the technology has created hundreds of jobs throughout the industry. “Right now, trying to find someone with OpenStack experience, especially in San Francisco, is nearly impossible, because demand is so high.”

The technology is currently generating hundreds of millions in revenue: Rackspace’s public cloud alone, which largely relies on OpenStack, takes in $150 million a year. Curry, Eshagh, and O’Brien all predict that the software will be its own billion-dollar industry within a few years.

Because OpenStack is open source, and is modified and improved by the people who use it, it is more likely to remain a cutting-edge solution for cloud computing needs. Says Eshagh, “We are starting to see the heavyweights in the industry adding services on top of OpenStack—which they can do because they have a common framework to build from. That means we’ll see even more services and products being created.”

In 2012, Rackspace took steps to secure OpenStack’s future as a free and open source project: the company began the process of spinning off the platform into its own nonprofit organization. By separating itself from any one commercial interest, Curry says, the project will be better positioned to continue doing what its founders hoped it would.

O’Brien maintains that OpenStack’s potential is far from being realized. “It’s hard to characterize in advance. If you had asked an expert about Linux years ago, who could have predicted that it would be in nearly everything, as it is today? It’s in phones and mobile devices. It’s in 75 percent of deployed servers. It’s even used to support space missions. OpenStack has a chance to hit something similar to that in cloud computing.”

Curry agrees: “In the future, you can envision almost all computing being done in the cloud, much of which could be powered by OpenStack. I think that NASA will need to receive significant credit for that in the history books. What we’ve been able to do is unbelievable, especially when you remember that it all started in a NASA lab.”

[Source]

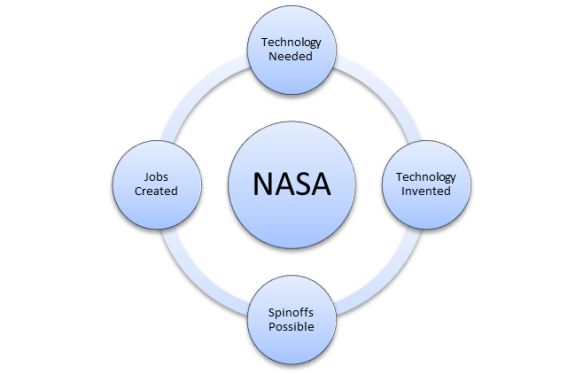

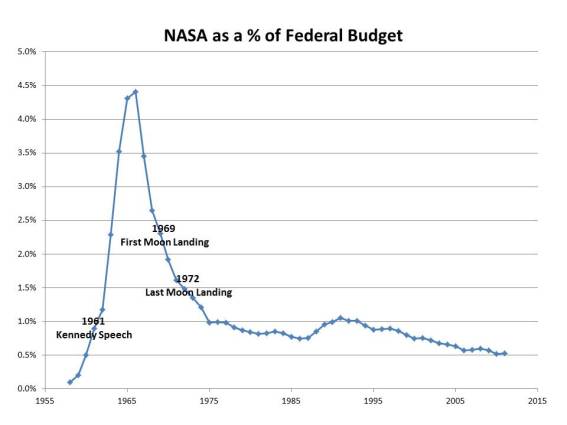

The point of spending on the space program, for example, is shown by the timeline of spinoff technologies [1]. I’m preparing the fiscal numbers using the information posted on this blog to examine the question, “What is the financial effect of science and technology funding?”, and the Apollo program is a perfect example for that purpose. As discussed in the timeline, Apollo led to “cool suits alleviate dangers from high-heat environments and medical conditions, Kidney dialysis machines remove toxic waste from used dialysis fluid, A machine aids physical therapy and athletic development, A stress-free ‘blow molding’ process manufactures athletic shoes, Communities benefit from water purification technology, Manufacturers preserve food through freeze-drying, and Sensors detect hazardous gasses.”
